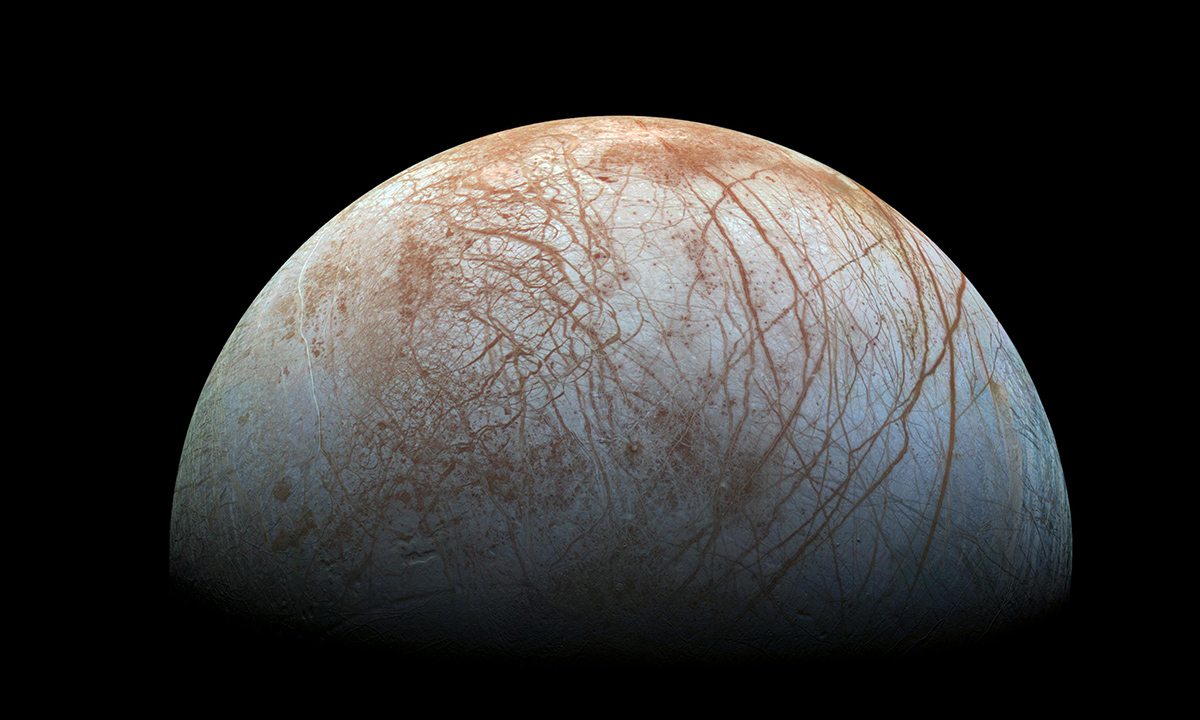

What we are dealing with is an image segmentation problem, a type of computer vision problem in which we try to find every pixel that belongs to a particular class.

A decision tree is a machine learning (ML) model that asks a series of "significant questions" about an object and makes a prediction about it based on the answers. The model learns from training data which questions to ask and in which order to ask them. Decision trees are easy to use, understand and build, but they tend to have high variance, that is, they can be very sensitive to the training data.

A random forest can be thought of as a stochastic version of a decision tree: it is built from many decision trees. Each tree in the forest is trained on a random sample of the datapoints (drawn with replacement), and only a random subset of the features is considered when choosing each split. The final result is obtained by taking a vote (or averaging the predictions) across the trees, which reduces the variance of any single tree.

Image segmentation can be thought of as a classification problem in which each pixel is classified as either belonging or not belonging to a class. In our case, the class is lineas.
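Treating segmentation as per-pixel classification can be sketched with scikit-learn's `RandomForestClassifier`. This is a minimal sketch under stated assumptions: the synthetic image, the toy "line" mask and the two per-pixel features (raw intensity and a 3x3 local mean) are invented for illustration and are not the features used in this project. For brevity the forest is evaluated on the same pixels it was trained on; a real setup would hold out separate images.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Synthetic 64x64 grayscale image: noisy background with a bright horizontal band.
h, w = 64, 64
image = rng.normal(0.2, 0.1, size=(h, w))
mask = np.zeros((h, w), dtype=int)  # 1 = target-class pixel, 0 = background
mask[30:33, :] = 1
image[mask == 1] += 0.6

def pixel_features(img):
    """Hypothetical per-pixel features: raw intensity and the mean of the 3x3 neighbourhood."""
    padded = np.pad(img, 1, mode="edge")
    # Add up the 9 shifted copies of the image to get a 3x3 box mean.
    local_mean = sum(
        padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
        for dy in range(3) for dx in range(3)
    ) / 9.0
    # One row per pixel, one column per feature.
    return np.stack([img.ravel(), local_mean.ravel()], axis=1)

X = pixel_features(image)
y = mask.ravel()

# Each tree is fit on a bootstrap sample of pixels and considers a random
# subset of the features at every split; predictions are combined across trees.
forest = RandomForestClassifier(n_estimators=50, max_features="sqrt", random_state=0)
forest.fit(X, y)

# Classify every pixel, then reshape the labels back into a segmentation mask.
pred_mask = forest.predict(X).reshape(h, w)
print("pixel accuracy:", (pred_mask == mask).mean())
```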

Convolutional Neural Networks (CNNs) are neural networks that are very good at image recognition and computer vision in general. A CNN has two parts. The first is a feature extractor, which learns a set of convolution kernels and uses them to extract various kinds of information from the image. The resulting feature maps are then passed to a fully connected neural network, which is trained to learn the relationships among these features that are needed to achieve a particular task.
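The feature-extraction step can be illustrated with a single convolution in NumPy. In a real CNN the kernel weights are learned; here a hand-picked vertical-edge kernel is an assumption chosen so the output is easy to read, and the small fully connected "head" at the end uses random, hypothetical weights. Like deep-learning libraries, the sketch computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation CNN 'convolution' layers compute."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Sum of the element-wise product between the kernel and the patch under it.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    return np.maximum(x, 0.0)

# 6x6 image: dark left half, bright right half (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-picked vertical-edge kernel (a trained CNN would learn its kernels).
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

feature_map = relu(conv2d(image, kernel))
print(feature_map)  # every row is [0. 3. 3. 0.]: the response peaks at the edge

# The flattened feature map then feeds a fully connected layer.
rng = np.random.default_rng(0)
weights = rng.normal(size=(feature_map.size, 2))  # hypothetical 2-class head
logits = feature_map.ravel() @ weights
print(logits.shape)
```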

UNET is a state-of-the-art framework for image segmentation. As a first step, a CNN (the contracting path) extracts features. However, as features are extracted and the resolution is reduced, information about the location of objects is lost, and this information is essential for image segmentation. UNET recovers it with an expanding path that upsamples the features back towards the input resolution, together with skip connections that pass the feature maps from each level of the contracting path to the corresponding level of the expanding path. These skip connections make UNET special, and even though it's been 6 years since it was introduced, it's still used today. It can be used with different backbone encoders; however, we used the vanilla UNET as described in the original paper.
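The role of the skip connections can be seen by tracing array shapes. This NumPy sketch is a toy, not the vanilla UNET: it has no learned weights, 2x2 max pooling stands in for the contracting step, nearest-neighbour upsampling stands in for the up-convolution, and feature maps are assumed to use a channels-first `(C, H, W)` layout.

```python
import numpy as np

def max_pool2x2(x):
    """2x2 max pooling on a (C, H, W) array; halves H and W."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2x(x):
    """Nearest-neighbour upsampling on a (C, H, W) array; doubles H and W."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
x = rng.random((4, 16, 16))  # hypothetical 4-channel feature map

# Contracting path: resolution drops, so fine location detail is lost.
enc1 = x                        # (4, 16, 16), kept for a skip connection
enc2 = max_pool2x2(enc1)        # (4, 8, 8), kept for a skip connection
bottleneck = max_pool2x2(enc2)  # (4, 4, 4)

# Expanding path: upsample, then concatenate the matching encoder map (skip connection).
dec2 = np.concatenate([upsample2x(bottleneck), enc2], axis=0)  # (8, 8, 8)
dec1 = np.concatenate([upsample2x(dec2), enc1], axis=0)        # (12, 16, 16)

print(enc2.shape, bottleneck.shape, dec2.shape, dec1.shape)
# (4, 8, 8) (4, 4, 4) (8, 8, 8) (12, 16, 16)
```

In the real network each concatenation is followed by learned convolutions that mix the channels; the point here is only that the full-resolution encoder map reaches the decoder unchanged, carrying the location detail that pooling discarded.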

From scikit-learn's official documentation:

> The DBSCAN algorithm views clusters as areas of high density separated by areas of low density. Due to this rather generic view, clusters found by DBSCAN can be any shape, as opposed to k-means which assumes that clusters are convex shaped. The central component to the DBSCAN is the concept of core samples, which are samples that are in areas of high density. A cluster is therefore a set of core samples, each close to each other (measured by some distance measure) and a set of non-core samples that are close to a core sample (but are not themselves core samples). There are two parameters to the algorithm, `min_samples` and `eps`, which define formally what we mean when we say dense. Higher `min_samples` or lower `eps` indicate higher density necessary to form a cluster.
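A minimal sketch of the two parameters in action with scikit-learn's `DBSCAN`. The data (two concentric rings plus three far-away outliers) and the values `eps=0.3`, `min_samples=4` are assumptions chosen for illustration. The rings are non-convex clusters of the kind the quote says k-means handles poorly, while DBSCAN separates them because each ring is a connected chain of core samples.

```python
import numpy as np
from sklearn.cluster import DBSCAN

def ring(radius, n_points):
    """Evenly spaced points on a circle of the given radius."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_points, endpoint=False)
    return np.column_stack([radius * np.cos(angles), radius * np.sin(angles)])

inner = ring(1.0, 60)    # neighbouring points about 0.10 apart
outer = ring(3.0, 150)   # neighbouring points about 0.13 apart
outliers = np.array([[6.0, 6.0], [-6.0, 6.0], [6.0, -6.0]])
X = np.vstack([inner, outer, outliers])

# eps: neighbourhood radius; min_samples: points within eps (including the
# point itself) required for a point to be a core sample.
db = DBSCAN(eps=0.3, min_samples=4).fit(X)
labels = db.labels_  # -1 marks noise

n_clusters = len(set(labels)) - (1 if -1 in labels else 0)
print("clusters:", n_clusters, "noise points:", int(np.sum(labels == -1)))
# clusters: 2 noise points: 3
```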

In cases where there is a huge imbalance among the classes, a model can achieve a high score even while misclassifying virtually all of the minority class. For example, if only 1% of the pixels belong to the class of interest, a model that labels every pixel as background still reaches 99% accuracy. To tackle this problem, one can oversample the minority class, undersample the majority class, or both. We would like to introduce three such methods: Random Under Sampling (RUS), Random Over Sampling (ROS) and SMOTE (Synthetic Minority Over-sampling Technique). Random Under Sampling simply deletes randomly chosen datapoints from the majority class. As one might guess, this is likely the worst of the three, since valuable information is lost. Random Over Sampling avoids losing information by randomly duplicating datapoints in the minority class, which essentially gives them more weight. SMOTE is a better way to oversample, in which one creates synthetic datapoints in the neighbourhood of existing minority datapoints. For each minority datapoint, it finds the k nearest neighbours within the minority class and creates new points at random positions along the line segments joining the datapoint to those neighbours.
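The three resampling strategies can be sketched in NumPy. The helpers `random_undersample`, `random_oversample` and `smote` are hypothetical, simplified versions written for illustration, assuming a binary problem with label 0 for the majority class and label 1 for the minority class; the toy data is invented. In practice the imbalanced-learn library provides tested implementations (`RandomUnderSampler`, `RandomOverSampler`, `SMOTE`).

```python
import numpy as np

rng = np.random.default_rng(0)

def random_undersample(X, y, rng):
    """RUS: randomly drop majority-class (label 0) rows until both classes have the same size."""
    majority_idx = np.flatnonzero(y == 0)
    minority_idx = np.flatnonzero(y == 1)
    keep = rng.choice(majority_idx, size=len(minority_idx), replace=False)
    idx = np.concatenate([keep, minority_idx])
    return X[idx], y[idx]

def random_oversample(X, y, rng):
    """ROS: randomly duplicate minority-class (label 1) rows until both classes have the same size."""
    majority_idx = np.flatnonzero(y == 0)
    minority_idx = np.flatnonzero(y == 1)
    extra = rng.choice(minority_idx, size=len(majority_idx) - len(minority_idx), replace=True)
    idx = np.concatenate([majority_idx, minority_idx, extra])
    return X[idx], y[idx]

def smote(X_min, n_new, k, rng):
    """SMOTE: create n_new points on segments between minority samples and their k nearest minority neighbours."""
    # Brute-force pairwise distances between minority samples (fine for small data).
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, len(X_min), size=n_new)          # random minority sample
    nbr = neighbours[base, rng.integers(0, k, size=n_new)]  # one of its k neighbours
    gap = rng.random((n_new, 1))                            # position along the segment, in [0, 1)
    return X_min[base] + gap * (X_min[nbr] - X_min[base])

# Toy imbalanced data: 95 majority points, 5 minority points.
X = np.vstack([rng.normal(0.0, 1.0, size=(95, 2)), rng.normal(3.0, 0.3, size=(5, 2))])
y = np.array([0] * 95 + [1] * 5)

X_rus, y_rus = random_undersample(X, y, rng)
X_ros, y_ros = random_oversample(X, y, rng)
synthetic = smote(X[y == 1], n_new=90, k=3, rng=rng)

print(np.bincount(y_rus), np.bincount(y_ros), synthetic.shape)
# [5 5] [95 95] (90, 2)
```

Because every synthetic point is a convex combination of two real minority points, SMOTE fills in the region the minority class already occupies instead of stacking exact copies on top of each other, as ROS does.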